ANN: Introduction

Artificial Neural Networks (ANNs)

Artificial Neural Networks (ANNs) are computational models inspired by the biological neural networks found in human brains, designed to process complex data and perform tasks such as classification, prediction, and pattern recognition.

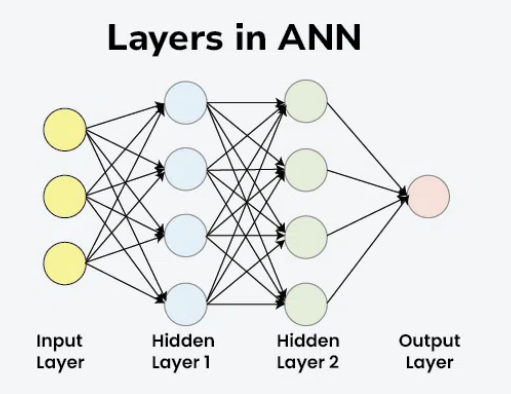

Their architecture typically comprises three types of layers:

- an input layer that receives data,
- one or more hidden layers that perform processing through interconnected nodes (neurons),
- and an output layer that delivers the final result.

ANNs are widely recognized for their ability to learn from large datasets, making them pivotal in various applications, including image and speech recognition, natural language processing, and medical diagnosis[1][2].

The growing significance of ANNs stems from their versatility and effectiveness across multiple domains, from enhancing customer experiences in eCommerce through personalized recommendations to advancing medical technologies by aiding in disease diagnosis and treatment planning[3][4].

However, their deployment also raises important ethical considerations, particularly regarding bias, accountability, and the implications of automated decision-making in sensitive fields such as healthcare.

As organizations increasingly integrate ANNs into their operations, issues of transparency and interpretability have emerged as critical concerns, challenging the trustworthiness of these models in high-stakes applications[5][6].

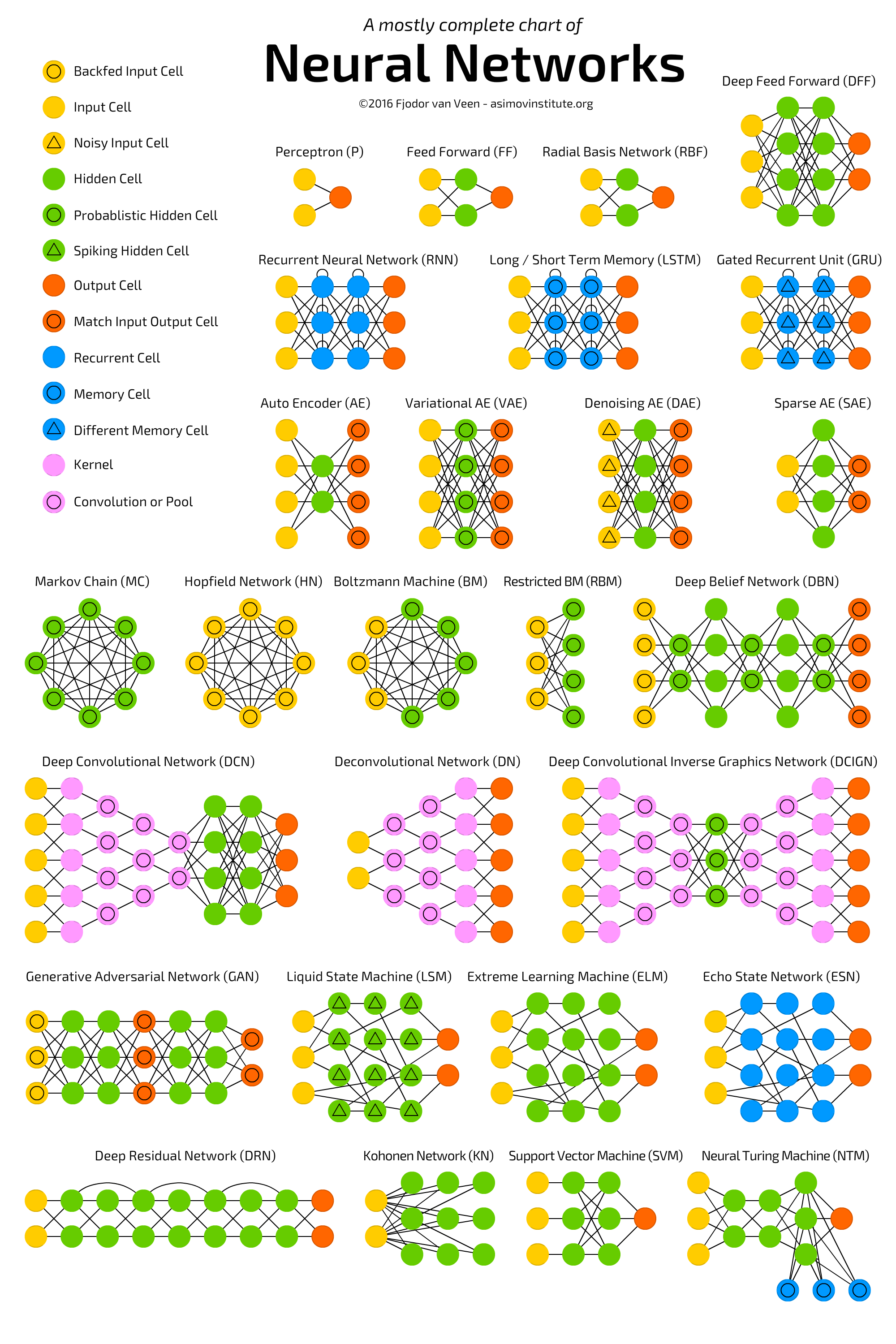

Various types of ANNs, including Feedforward Neural Networks (FNNs), Convolutional Neural Networks (CNNs), and Recurrent Neural Networks (RNNs), cater to different tasks and data structures, each offering unique advantages.

For instance, CNNs excel in image-related tasks by identifying spatial hierarchies in data, while RNNs are adept at processing sequential data, such as time series or natural language, by maintaining context across inputs[7][8].

Despite their transformative potential, ANNs face challenges, including the risk of overfitting, the requirement for extensive training data, and the computational resources needed for effective training.

Furthermore, the complex nature of ANNs often leads to their characterization as “black boxes,” making it difficult to interpret the decision-making processes involved.

As research progresses, addressing these limitations while promoting ethical practices will be essential to unlocking the full potential of ANNs across diverse applications[10][11].

Structure of ANNs

Artificial Neural Networks (ANNs) are structured to mimic the interconnected network of neurons in the human brain, facilitating complex information processing. The architecture of an ANN typically consists of three main types of layers: the input layer, hidden layers, and the output layer.

Input Layer

The input layer serves as the initial point of data entry for the neural network. It receives information from the external environment in various forms such as numbers, letters, audio files, or image pixels.

Each node in the input layer corresponds to a specific feature of the data being processed, and it plays a critical role in transmitting this information to subsequent layers for further analysis[1][2].

Hidden Layers

Hidden layers are positioned between the input and output layers and are responsible for the majority of the processing within the ANN. A network may have one or multiple hidden layers, each containing numerous artificial neurons.

These layers perform complex mathematical operations on the input data, identifying patterns and relationships that are not immediately apparent. The output from one hidden layer becomes the input for the next layer, allowing for a deeper analysis of the data at each stage[1][3][2].

Types of Hidden Layers: Hidden layers can vary in their configuration and function. They may employ different types of activation functions, which introduce non-linear properties to the network, enabling it to learn complex mappings from inputs to outputs.

The choice of activation function can significantly impact the performance of the neural network in various tasks[4][5].
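As a rough illustration of the non-linearity that activation functions introduce, the sketch below implements three commonly used ones with NumPy. The choice of sigmoid, tanh, and ReLU is an assumption made for illustration; the text above does not name specific functions.

```python
import numpy as np

def sigmoid(z):
    # Squashes any real value into the range (0, 1)
    return 1.0 / (1.0 + np.exp(-z))

def tanh(z):
    # Squashes any real value into the range (-1, 1)
    return np.tanh(z)

def relu(z):
    # Keeps positive values and replaces negative values with 0
    return np.maximum(0.0, z)

z = np.array([-2.0, 0.0, 2.0])
for name, fn in [("sigmoid", sigmoid), ("tanh", tanh), ("relu", relu)]:
    print(name, fn(z))
```

Each function maps the same inputs to a differently shaped output, which is one reason the choice can affect how well a network learns a given task.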

Output Layer

The output layer is the final layer in the ANN structure and provides the ultimate result of the computations performed by the network. Depending on the nature of the task, the output layer can contain a single node for binary classification or multiple nodes for multi-class classification problems.

Each output node generates a response based on the processed information, translating the network’s findings into actionable insights or predictions[1][3][5].
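The difference between a single-node and a multi-node output layer can be sketched as follows. This is a minimal example that assumes a sigmoid for the binary case and a softmax for the multi-class case, which are common choices but are not specified in the text; the raw scores are made-up values.

```python
import numpy as np

def sigmoid(z):
    # Single output node: probability of the positive class
    return 1.0 / (1.0 + np.exp(-z))

def softmax(z):
    # Multiple output nodes: one probability per class, summing to 1
    shifted = z - np.max(z)  # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum()

binary_score = 0.8                       # hypothetical raw score from one node
p_positive = sigmoid(binary_score)

class_scores = np.array([2.0, 1.0, 0.1])  # hypothetical raw scores, three classes
class_probs = softmax(class_scores)
predicted_class = int(np.argmax(class_probs))

print(p_positive, class_probs, predicted_class)
```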

Types of ANNs

Artificial Neural Networks (ANNs) can be categorized into several key types, each designed for specific tasks and applications.

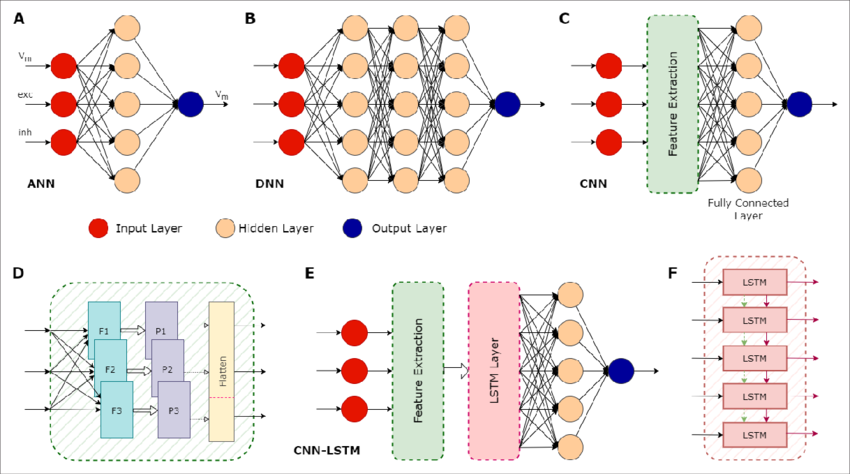

Figure 1. Illustration of various types of artificial neural networks (ANN) and their associated components

(A) A basic ANN consists of an input layer (red circles), one or more hidden layers (peach circles), and an output layer (blue circle). In the case of neuronal modelling, the input could be features such as the membrane potential (V_m), and the excitatory (exc) and inhibitory (inh) synaptic inputs. The hidden layers perform computations on the inputs, with the actual operations depending on the type of ANN. Their objective is to identify features in the inputs and use these to correlate a given input and the correct output. An ANN can have multiple outputs: in this example, the output is a prediction of the membrane potential. (B) A deep neural network (DNN) is an ANN with multiple hidden layers. (C) A convolutional neural network (CNN) is a type of DNN that can be trained to extract important features contained in the input data, which can then be used as inputs to the other hidden layers, significantly improving the performance of the overall network. (D) Some details of the feature extraction process of a CNN, which consists of several hidden layers. First, it has multiple filters (F1, F2, F3), each configured to capture specific features. This process can greatly increase the size of the data, so a pooling layer (P1, P2, P3) is then used to reduce this size. The pooling process does not lead to the loss of valuable data; instead, it helps remove noise and consolidate meaningful data. The flattening layer converts the pooled data into a 1-dimensional stream. This serves as an input for the subsequent fully connected layer, which does the final evaluation to produce the output based on the features extracted by the convolution layers. (E) A CNN with a long short-term memory (LSTM) layer. The additional LSTM layer enables the network to benefit from long-term memory, in addition to the existing short-term working memory. (F) The LSTM layer achieves this long-term memory through its ability to relay both the cell state (dashed green arrows) and the output generated by each module (solid maroon arrows) across its several modules, allowing the flow of useful information. This enables the network to better identify context in the input data over longer time periods. CNN-LSTMs have been found useful for predicting time series data.

Feedforward Neural Networks (FNNs)

Feedforward Neural Networks (FNNs) represent the most basic type of neural network architecture. In an FNN, data moves in one direction—from input nodes, through hidden nodes (if present), and finally to output nodes. There are no cycles or loops; thus, information does not flow backward. This structure allows for straightforward processing and is primarily used for tasks where the output is determined solely by the input data without considering previous states[6][1].
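The one-directional flow described above can be sketched as a pair of matrix multiplications. The layer sizes, the random weights, and the tanh activation are all assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: 3 input features, 4 hidden neurons, 2 outputs
W1 = rng.normal(size=(3, 4))
b1 = np.zeros(4)
W2 = rng.normal(size=(4, 2))
b2 = np.zeros(2)

def forward(x):
    # Input -> hidden -> output, with no loops and no stored state
    hidden = np.tanh(x @ W1 + b1)
    return hidden @ W2 + b2

x = np.array([0.5, -1.0, 2.0])
y = forward(x)
print(y)
```

Because the function keeps no state between calls, the same input always produces the same output, which is the property that distinguishes an FNN from a recurrent network.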

Convolutional Neural Networks (CNNs)

Convolutional Neural Networks (CNNs) are specialized for processing structured grid data, such as images. They utilize convolutional layers that detect significant features in the input data, enabling the network to classify images or recognize patterns effectively. CNNs consist of multiple layers that perform convolutions and pooling operations to reduce dimensionality while preserving essential information, making them particularly suitable for tasks like image recognition and video analysis[7][8].
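To make the convolution and pooling operations concrete, here is a minimal NumPy sketch, not a full CNN. The 6x6 image, the vertical-edge filter, and the 2x2 max pooling are assumptions for illustration; in a trained CNN the filter values are learned rather than hand-written.

```python
import numpy as np

def conv2d(image, kernel):
    # "Valid" sliding-window product, as used in CNN convolution layers
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool2d(fmap, size=2):
    # Keep the largest value in each non-overlapping size x size block
    h = fmap.shape[0] // size * size
    w = fmap.shape[1] // size * size
    blocks = fmap[:h, :w].reshape(h // size, size, w // size, size)
    return blocks.max(axis=(1, 3))

# Toy image: dark left half, bright right half (a vertical edge)
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-written filter that responds to left-to-right brightness increases
kernel = np.array([[-1.0, 0.0, 1.0]] * 3)

features = conv2d(image, kernel)   # 4x4 feature map
pooled = max_pool2d(features)      # 2x2 after pooling
print(features)
print(pooled)
```

The feature map is non-zero only where the filter window straddles the edge, and pooling shrinks the map while keeping that strongest response.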

Recurrent Neural Networks (RNNs)

Recurrent Neural Networks (RNNs) are designed for sequence prediction tasks, where the order of data matters, such as time-series analysis or natural language processing. RNNs have loops that allow information to persist, enabling them to remember previous inputs and utilize this context to inform their output. This architecture is particularly effective for applications involving temporal dependencies and sequential data[4].
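The loop that lets information persist can be sketched with a plain (Elman-style) recurrent step. This is a simplified illustration with random, untrained weights and hypothetical sizes, and it is not an LSTM.

```python
import numpy as np

rng = np.random.default_rng(0)
input_size, hidden_size = 2, 3   # hypothetical sizes

W_xh = rng.normal(scale=0.5, size=(input_size, hidden_size))
W_hh = rng.normal(scale=0.5, size=(hidden_size, hidden_size))
b_h = np.zeros(hidden_size)

def run_rnn(sequence):
    # The hidden state h carries context from earlier inputs to later ones
    h = np.zeros(hidden_size)
    for x_t in sequence:
        h = np.tanh(x_t @ W_xh + h @ W_hh + b_h)
    return h

seq = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
h_forward = run_rnn(seq)
h_reversed = run_rnn(seq[::-1])
print(h_forward)
print(h_reversed)
```

Feeding the same items in reverse order yields a different final state, which shows that the network is sensitive to the order of its inputs.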

Self-Organizing Maps (SOMs)

Self-Organizing Maps (SOMs) are a type of unsupervised learning neural network that clusters and visualizes complex data. They organize input data into a lower-dimensional representation while preserving the topological properties of the original data space. This method is useful for tasks such as market segmentation and image processing, where hidden patterns and relationships within data are revealed without predefined labels[9].
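A single SOM update can be sketched as below, assuming a 4x4 grid, 3-dimensional inputs, and a Gaussian neighborhood. These choices, and the fixed learning rate and radius, are illustrative assumptions; practical SOMs usually shrink both over the course of training.

```python
import numpy as np

rng = np.random.default_rng(0)
grid_h, grid_w, dim = 4, 4, 3    # hypothetical map and input sizes
weights = rng.random((grid_h, grid_w, dim))

# Grid coordinates of every node, shape (grid_h, grid_w, 2)
coords = np.stack(
    np.meshgrid(np.arange(grid_h), np.arange(grid_w), indexing="ij"), axis=-1
)

def best_matching_unit(x):
    # Node whose weight vector is closest to the input
    dists = np.linalg.norm(weights - x, axis=-1)
    return np.unravel_index(np.argmin(dists), dists.shape)

def train_step(x, lr=0.5, radius=1.0):
    # Pull the best matching unit and its grid neighbors toward x
    bmu = np.array(best_matching_unit(x))
    grid_dist2 = np.sum((coords - bmu) ** 2, axis=-1)
    influence = np.exp(-grid_dist2 / (2.0 * radius ** 2))
    weights[...] += lr * influence[..., None] * (x - weights)

x = np.array([1.0, 0.0, 0.0])
before = np.linalg.norm(weights - x, axis=-1).min()
train_step(x)
after = np.linalg.norm(weights - x, axis=-1).min()
print(before, after)
```

Because neighbors on the grid are updated together, nearby nodes end up representing similar inputs, which is how the map preserves topology without labels.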

Radial Basis Function Networks (RBFNs)

Radial Basis Function Networks (RBFNs) use radial basis functions as activation functions. They are particularly effective for interpolation in multi-dimensional space. RBFNs can approximate complex functions and are commonly employed in classification tasks and function approximation, serving as a valuable tool for machine learning applications[4].
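As a hedged sketch of function approximation with radial basis functions, the example below uses Gaussian basis functions with fixed, evenly spaced centers and fits the output weights by least squares. The target function (a sine curve), the number of centers, and the width parameter `gamma` are assumptions for illustration.

```python
import numpy as np

def rbf_features(X, centers, gamma=1.0):
    # Gaussian activation: close to 1 near a center, decaying with distance
    d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1)
    return np.exp(-gamma * d2)

# Toy task: approximate sin(x) on [0, 2*pi]
X = np.linspace(0.0, 2.0 * np.pi, 20)[:, None]
y = np.sin(X).ravel()

centers = np.linspace(0.0, 2.0 * np.pi, 8)[:, None]  # fixed hidden-unit centers
Phi = rbf_features(X, centers)                        # hidden-layer activations

# Linear output layer, fitted by least squares
w, *_ = np.linalg.lstsq(Phi, y, rcond=None)
pred = Phi @ w
print(np.max(np.abs(pred - y)))
```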

Training of NNs

The training of neural networks is a crucial process that enables these models to learn from data and make accurate predictions.

This process can be broken down into several key components, including the training methods and the iterative learning cycle.

Training Methods: Neural networks are primarily trained using three different approaches: supervised learning, unsupervised learning, and reinforcement learning.

Supervised Learning

In supervised learning, the neural network is provided with labeled training data, which includes input-output pairs. This allows the network to learn specific features by comparing its predictions against the known outputs. The goal is to minimize the difference between the predicted outputs and the actual outputs, a difference that is quantified by a loss function[10][11].

Unsupervised Learning

Unsupervised learning differs from supervised learning in that the neural network works with data that does not have labeled outputs. The primary aim is to discover the underlying structure and patterns within the input data. Techniques such as clustering and association are commonly employed in this approach[12][13].

Reinforcement Learning

Reinforcement learning enables a neural network to learn through interaction with its environment. In this framework, the network receives feedback in the form of rewards or penalties based on its actions, guiding it to develop strategies that maximize cumulative rewards over time. This method is particularly effective in areas like gaming and decision-making tasks[14][15].

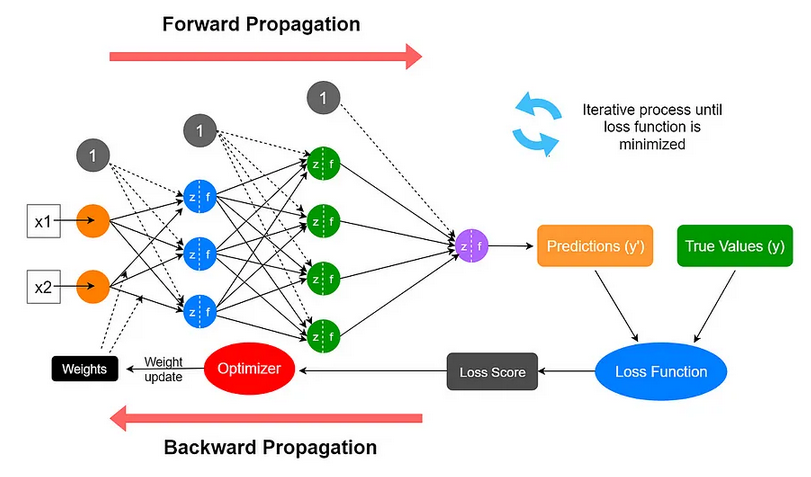

Learning Process

The learning process of a neural network is iterative and consists of three main phases: forward propagation, loss function calculation, and backward propagation.

Forward Propagation

During forward propagation, data is passed through the layers of the network, with each neuron computing a weighted sum of its inputs plus a bias and passing the result through an activation function. The weights determine the significance of each input, while the bias shifts the point at which the neuron activates[11][14].

Calculation of the Loss Function

After forward propagation, the network’s output is evaluated against the desired output using a loss function. This function quantifies the difference between the predicted and actual values, providing a measure of how well the network is performing[11][14].
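Two widely used loss functions are sketched below: mean squared error and binary cross-entropy. The text does not say which loss the authors have in mind, so both are shown as assumptions, with made-up predictions to compare.

```python
import numpy as np

def mse(y_true, y_pred):
    # Mean squared error: average squared gap between target and prediction
    return np.mean((y_true - y_pred) ** 2)

def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    # Penalizes confident wrong probabilities more heavily than MSE does
    p = np.clip(p_pred, eps, 1.0 - eps)  # avoid log(0)
    return -np.mean(y_true * np.log(p) + (1.0 - y_true) * np.log(1.0 - p))

y_true = np.array([1.0, 0.0, 1.0])
good = np.array([0.9, 0.1, 0.8])   # hypothetical predictions near the targets
bad = np.array([0.4, 0.6, 0.3])    # hypothetical predictions far from the targets

print(mse(y_true, good), mse(y_true, bad))
print(binary_cross_entropy(y_true, good), binary_cross_entropy(y_true, bad))
```

In both cases the predictions closer to the targets receive the lower loss, which is the signal the training process tries to reduce.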

Backward Propagation

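Backward propagation, or backpropagation, is the process of working out how the weights and biases in the network should be adjusted based on the calculated loss. This step involves propagating the error backwards through the network to compute the gradient of the loss with respect to each parameter, which an optimizer such as gradient descent then uses to update the parameters, allowing the model to learn from its mistakes and improve its performance in future predictions. The cycle of forward propagation, loss calculation, and backward propagation repeats until the loss stops improving or the network achieves satisfactory accuracy[11][14].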
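The three phases can be put together in a short training loop. The sketch below is a minimal example under stated assumptions: a toy regression task (learning sin(x)), one hidden layer of 8 tanh neurons, mean squared error, and plain full-batch gradient descent with a fixed learning rate. None of these choices come from the text.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data, assumed for illustration: 32 points of y = sin(x)
X = np.linspace(-2.0, 2.0, 32).reshape(-1, 1)
Y = np.sin(X)

# One hidden layer with 8 neurons (hypothetical size)
W1 = rng.normal(scale=0.5, size=(1, 8))
b1 = np.zeros(8)
W2 = rng.normal(scale=0.5, size=(8, 1))
b2 = np.zeros(1)

lr = 0.05
losses = []

for step in range(500):
    # 1. Forward propagation
    H = np.tanh(X @ W1 + b1)
    pred = H @ W2 + b2

    # 2. Loss calculation (mean squared error)
    err = pred - Y
    losses.append(np.mean(err ** 2))

    # 3. Backward propagation: chain rule from the output back to the input
    d_pred = 2.0 * err / len(X)
    dW2 = H.T @ d_pred
    db2 = d_pred.sum(axis=0)
    dH = d_pred @ W2.T
    dZ = dH * (1.0 - H ** 2)       # derivative of tanh
    dW1 = X.T @ dZ
    db1 = dZ.sum(axis=0)

    # Gradient descent update
    W1 -= lr * dW1
    b1 -= lr * db1
    W2 -= lr * dW2
    b2 -= lr * db2

print(losses[0], losses[-1])
```

Comparing the first and last recorded loss gives a quick check that the updates are moving the parameters in a useful direction.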

By effectively combining these training methods and processes, neural networks can tackle complex tasks and make reliable predictions across various applications, including computer vision, natural language processing, and financial modeling[16][12].

Applications

Artificial Neural Networks (ANNs) have a wide range of applications across various industries, significantly enhancing operational efficiency and decision-making processes. Their versatility allows them to address complex problems in fields such as healthcare, eCommerce, computer vision, and natural language processing:

- Natural Language Processing: In the realm of natural language processing (NLP), ANNs contribute significantly to the development of applications such as chatbots and language translation services. Models like BERT have transformed how machines understand human language, enabling them to grasp context and semantics more effectively. This capability allows for improved customer service interactions and the automation of repetitive tasks, thereby enhancing overall productivity[17][18].

- Healthcare: In healthcare, ANNs are utilized to analyze vast amounts of medical data, assisting in diagnosing diseases, predicting patient outcomes, and optimizing treatment plans. For instance, neural networks can be employed to identify patterns in patient records that correlate with successful treatment outcomes, ultimately improving the quality of care provided[19]. Additionally, ANN applications in imaging analysis have shown promise in detecting anomalies in medical images, such as tumors in radiology scans, thereby aiding radiologists in making accurate assessments[19].

- eCommerce: In the eCommerce sector, ANNs play a crucial role in personalizing the shopping experience. Retail giants like Amazon and AliExpress leverage AI-driven algorithms to recommend products based on user behavior and preferences. By analyzing browsing patterns and purchase history, these platforms can suggest related items, enhancing customer satisfaction and increasing sales[20][21]. Furthermore, ANNs are employed in inventory management and demand forecasting, helping businesses optimize stock levels and reduce costs associated with overstocking or stockouts[20].

- Computer Vision: ANNs are foundational to advancements in computer vision, particularly in image recognition tasks. This technology enables applications such as facial recognition and object detection, which have widespread uses in security systems and social media platforms. For instance, convolutional neural networks (CNNs) are specifically designed to process and analyze visual data, identifying features and providing accurate descriptions of images[21][22]. As a result, businesses can streamline operations, improve customer engagement, and derive insights from visual data analytics[22].

Challenges and Limitations

Artificial Neural Networks (ANNs) face several challenges and limitations that impact their effectiveness and applicability in various fields, particularly in healthcare and data analysis:

- Ethical Considerations: Ethical dilemmas arise with the use of ANNs, particularly regarding issues of bias, accountability, and the implications of automated decision-making in critical areas such as healthcare[23]. Ensuring that ANN systems are fair, transparent, and accountable remains an ongoing concern that researchers and practitioners must address as the technology continues to evolve[23].

- Overfitting and Generalization: One significant challenge associated with ANNs is the phenomenon of overfitting, where the model becomes overly complex and tailored to the training data, failing to generalize to new, unseen data[24]. Overfitting can occur when the model captures not only the underlying patterns but also the noise present in the training dataset. Regularization techniques are often employed to mitigate this issue, but finding the right balance between model complexity and generalization remains a critical challenge[24].

- Interpretability and Transparency: Another major limitation of ANNs is their poor interpretability. Unlike traditional models, which can explicitly identify causal relationships, ANNs often function as “black boxes” where the reasoning behind their predictions is unclear[25]. This lack of transparency can hinder trust and acceptance, especially in sensitive applications such as healthcare, where understanding the basis for a diagnosis or treatment recommendation is crucial for both practitioners and patients[25].

- Training Data Requirements: ANNs require large amounts of training data to perform effectively, which can be a significant barrier in scenarios where data availability is limited or where privacy concerns restrict access to sensitive information[25]. Additionally, the process of training ANNs can be time-consuming and computationally intensive, necessitating substantial computational resources and potentially leading to increased costs[25].

- Implementation Challenges: The integration of ANNs into existing workflows and systems also presents numerous challenges. Issues related to interoperability, standardization, and the alignment of ANNs with clinical workflows can impede their successful adoption[25]. Furthermore, the complexity of model development often necessitates multiple iterations, which can be resource-intensive and may deter organizations from fully leveraging ANN capabilities[25].
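The regularization techniques mentioned under Overfitting and Generalization come in several forms. One common form, L2 regularization (weight decay), can be sketched as follows. The text does not name a specific technique, so this choice, the helper names, and the penalty strength `lam` are assumptions for illustration.

```python
import numpy as np

def l2_regularized_loss(data_loss, weights, lam):
    # Add a penalty proportional to the sum of squared weights
    return data_loss + lam * sum(np.sum(W ** 2) for W in weights)

def l2_gradient(grad, W, lam):
    # The penalty adds 2 * lam * W to the gradient, shrinking large weights
    return grad + 2.0 * lam * W

W = np.array([[1.0, -2.0]])       # hypothetical weight matrix
data_grad = np.zeros_like(W)      # pretend the data loss has zero gradient here

total = l2_regularized_loss(0.5, [W], lam=0.1)
grad = l2_gradient(data_grad, W, lam=0.1)
print(total, grad)
```

A larger `lam` pushes the weights harder toward zero, trading some fit on the training data for a simpler model, which is the balance the Overfitting and Generalization item describes.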

Future Directions

The field of artificial neural networks (ANNs) is rapidly evolving, with ongoing research aimed at enhancing the architecture and functionality of these systems. Future developments are anticipated to focus on biologically inspired designs that emulate the cognitive processes of the human brain. This includes creating architectures capable of both memorization and forgetting, which could lead to more efficient and adaptable learning systems[15].

As the integration of neuroscience and AI progresses, researchers speculate that the collaboration between bottom-up approaches, which build on neural circuit principles, and top-down methodologies, which leverage insights from cognitive tasks, will illuminate new pathways for creating intelligent machines[17].

Despite the advancements in ANN technology, several barriers to adoption remain. These include issues related to interoperability, privacy, and the integration of health information technology (HIT) into clinical workflows. Addressing these challenges will require a multi-faceted approach, encompassing technical innovations alongside solutions for political, fiscal, and cultural hurdles[25]. The promotion of standards and controlled terminologies is essential for the broader implementation of AI in healthcare and other sectors[25].

ANNs are already transforming industries such as finance, healthcare, and entertainment, but their potential applications are far from exhausted. As scalability improves, particularly with architectures designed to handle large datasets efficiently, new applications in areas like energy consumption forecasting and predictive analytics are likely to emerge[26]. Furthermore, the exploration of diverse types of neural networks, including feedforward, recurrent, and convolutional networks, will enable customized solutions tailored to specific challenges in various domains[27].

The future of ANNs also hinges on community-driven research efforts. Initiatives like Frontiers’ Research Topics encourage collaboration among researchers across disciplines, fostering a vibrant ecosystem for knowledge exchange and innovation[28]. This collaborative spirit is expected to accelerate the pace of discovery and application in the field of AI, opening up new opportunities for exploration and development.